One year of code review club with the William Harvey Research Institute

Over the past year, researchers from QMUL's William Harvey Research Institute (WHRI) have taken part in a collaborative code review club. Through this effort, the group aims to peer-review the computational components of their research and to provide code quality assurance for all participating researchers. The Research Software Engineering (RSE) group of ITS Research has supported the club through knowledge transfer and by taking part in the review process.

Researchers took turns presenting examples of their code for others to review. The benefits of code review are now widely recognised, and this post presents a case study of one research group's experience.

Before going further, it is worth noting that code review among the WHRI researchers came with its own caveats, which matter when drawing parallels with other groups. First, these researchers use the R programming language for most tasks, from computation to scripting. Second, the methods they use share a very homogeneous scientific background, mainly centred on computational biology. Lastly, the members of the code review group self-selected to participate. Changing any of these factors may significantly affect the outcome.

The process

The group met for one hour every other week during the past academic year. In the first half of each meeting, one researcher presented the basics of the background, methods, and code to be reviewed. In the second half, the reviewers of the previous session's code took turns explaining their proposed changes. Because of these time constraints, not everybody had a turn at being reviewed, and not everyone reviewed all of the code presented.

To assess how well the process worked for the researchers, we introduced an open-ended feedback form with no yes/no or mandatory questions. The goal was to capture nuanced views on what did and did not work as intended in the code review. The questions were grouped into two general categories, "reviewing others" and "being reviewed", and a summary of the findings follows.

Reviewing others

Everyone was invited, on an optional basis, to review every piece of code. This is a departure from the usual review process, so it was an open question whether the approach would work. More specifically, did the presentations provide the information reviewers needed? Did technical issues, the time commitment, or a perceived lack of programming skill keep participants from reviewing? And was reviewing useful to the reviewers themselves?

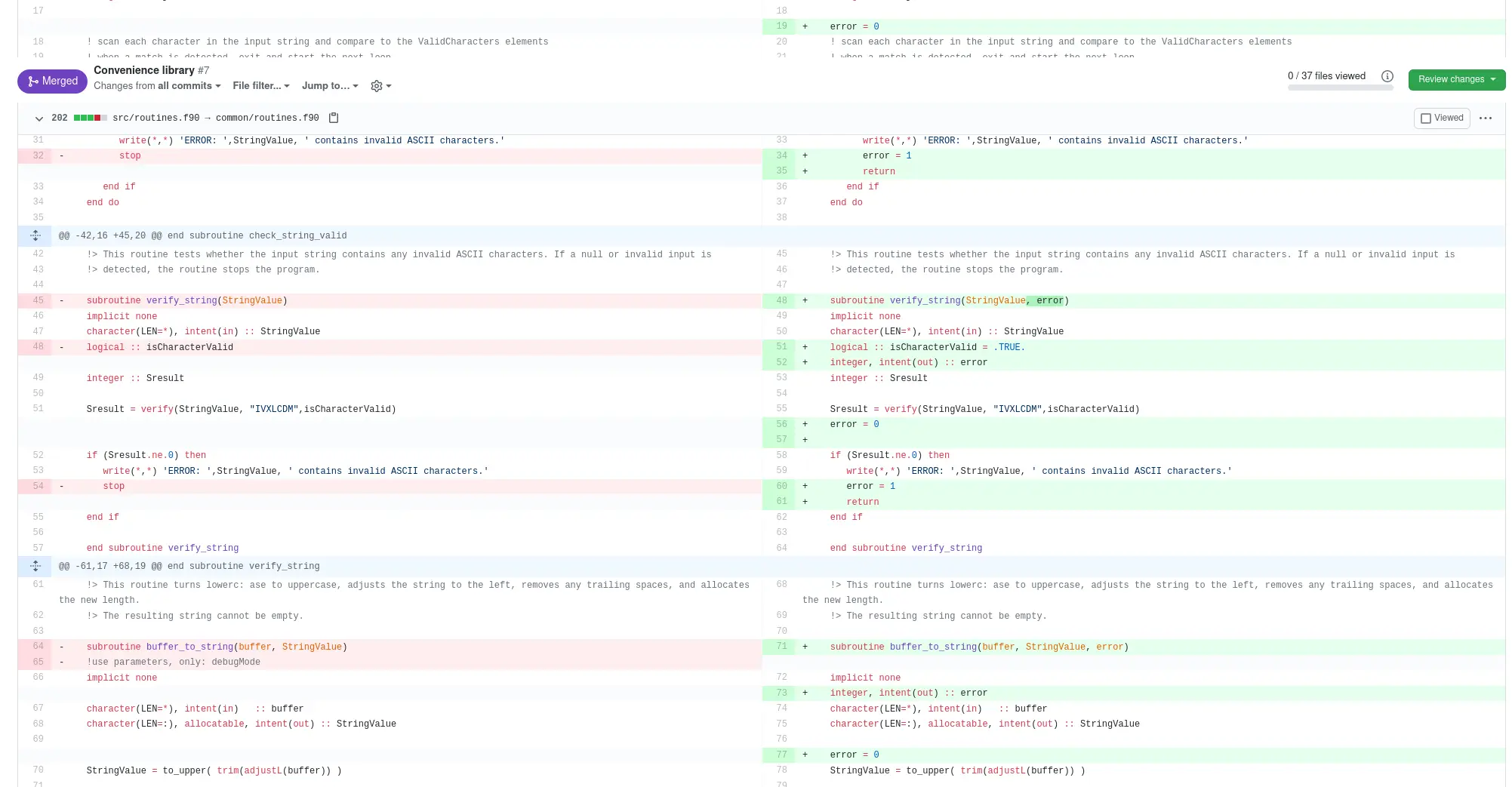

Most reviewers thought the presentations covered the necessary ground, though a minority would have preferred more time spent on the code and algorithms. The time spent writing a review varied widely, with self-reported figures ranging from 10 minutes to 2 hours. Reviewers did not feel they got any faster over successive reviews. Some were discouraged from contributing by the higher level of programming or algorithmic expertise that certain reviews required. On the technical side, several researchers had problems with the review workflow on QMUL's GitHub. Last but not least, every reviewer found taking part in the review process personally useful.

Receiving reviews

Taking part in a review as a code author is not a skill taught in the vast majority of STEM academia. As most academics have no background in computer science or software engineering, their first experience on the receiving end of a review can be rather intimidating.

Despite this, every researcher who presented code found the reviews helpful. They cited multiple benefits: improved code organisation, better outputs, suggestions for future implementations, entirely new ideas, and programming bugs identified. The code authors also agreed that the time needed to understand the reviews and implement the proposed changes was acceptable and well spent. Reviewers raised a wide range of points for consideration and improvement, often outside the authors' domain knowledge or programming expertise. Finally, authors preferred quick summaries to long reviews, especially when the summary made specific suggestions and included a list of changes.

The researcher's viewpoint

We asked one of the club's participants, Pilar Cacheiro, Research Fellow at WHRI, to share her personal experience of the first year. Her account follows.

As scientists, we are supposed to be used to having our work reviewed by peers, but having your code subjected to the scrutiny of your colleagues feels particularly intimidating. This was the main challenge to overcome when we decided to start this code review group. I think that creating a friendly environment, sharing similar domains of expertise, and having technical support have all contributed to making these code review sessions quite a successful attempt. Most of us had little to no previous experience with collaborative tools, so learning to use Git and GitHub in this setting was probably the first obstacle, but also the first achievement. I definitely got very valuable feedback on my code, but I also learned while reviewing others' code. I particularly enjoyed the discussions that arose about the convenience of using a particular function or approach, or how to optimise your code. Other participants may have different experiences, but these are the main lessons for me:

- Everyone can provide very valuable feedback regardless of their level of expertise: a simple "I faced this problem when trying to run the code in this version of R" is already very useful. Similarly, feedback on function outputs or visualisations, even when not meant to improve the code directly, can have a positive impact.

- You write better code when you know it's going to be seen by others. You make an effort to annotate the code and to be more consistent in style, and this in itself is already a great benefit.

- My code has always improved, and I have always learned something new after sharing it. I realised how much I don't know and how much room for improvement there is, and also how hard it is to get rid of some (bad) old habits.

One final, brief point, on a less optimistic note: it was difficult at times to get people to engage in the reviews. Some of the reasons, as explained above, include time constraints, lack of confidence in one's ability to contribute, and problems with GitHub.

I have no doubt the code review club has proven to be an amazing learning opportunity. I hope it continues to grow and evolve. It's very rewarding to see this type of initiative working.

Takeaways

The code review club had a successful first year. The researchers of the WHRI group were introduced to a collaborative review process that benefited both the code authors and the reviewers. The RSE group supported the effort with introductory courses on relevant tools such as Bash, Git, and GitHub.

The group is continuing its fortnightly meetings this academic year. The RSE group has introduced a new collaborative review tool, Crucible, to make it easier to hold conversations during reviews. We hope to extend this service to other interested groups; if you belong to one, please get in touch.