Speeding up and Parallelising R packages (using Rcpp and C++)¶

Polytomous variables can be used to model data that has two or more possible outcomes. For example, a survey with multiple-choice questions is polytomous. The R package, poLCA, does statistical clustering of polytomous variables. For example, grouping together survey results that are similar to each other.

A researcher came for help with a problem when using poLCA with a large data set, containing about half a million data points, each with about two hundred responses. On Apocrita, the program would run beyond the 10 days time limit with no results. This may be a sign the package is very inefficient. Fixing this problem would require investigating the source code of poLCA and assessing what needs to be done for the package to improve.

Optimisation aims to improve the efficiency of a piece of code so it can run faster. Techniques for optimisation can include, for example, programming in a faster language, improving cache efficiency or parallelising parts of an algorithm. With these optimisation techniques, the package can run within an hour or quicker when using multiple threads on a node.

In this blog post, I will go through some modifications I made to the the package which may be useful to readers, including avoiding expensive calculations and the use of C++.

You may not need optional outputs¶

The output of poLCA can be summarised into three items: estimated probabilities, standard errors and goodness-of-fit statistics. Calculating the estimated probabilities serves the purpose of the package as it describes the results of clustering of the data. However, the other two items are optional as they describe uncertainty in the fitted model and how good the fit is.

In the

documentation of poLCA,

there's an argument called calc.se which does the following:

calc.seLogical, indicating whetherpoLCAshould calculate the standard errors of the estimated class-conditional response probabilities and mixing proportions. The default isTRUE...[cont.]

We found that setting calc.se to FALSE made the program run faster as it

avoids performing expensive calculations. This is important to consider when the

user does not need these results in the first place.

However, it was a different story for the goodness-of-fit statistics. In the paper Linzer, D.A. and Lewis, J.B.,2011, from the authors of poLCA, they specified that:

Like the AIC and BIC, [the goodness-of-fit statistics] are outputted automatically after calling

poLCA

This means that the goodness-of-fit statistics, no matter if you need them or not, will be calculated. When I manually and interactively profiled each section of the code, I noticed that it could spend days on this calculation. Some of my first modifications were to make these calculations optional, giving the user an option to avoid such an expensive calculation.

In summary, optimisation can include removing unwanted and unnecessary parts of the code, such as expensive optional calculations. It would be a waste of resources to calculate something but to then be thrown away immediately by the user.

R vs C++¶

R is an interpreted language, meaning it executes instructions directly line by line without the use of a compiler. This gives users a lot of flexibility in running R code. For example, a user in RStudio can highlight lines of R code in any order and execute them. C++ is a compiled language, it requires a compiler to compile, or in other words translate, C++ code into machine code. Running the resulting machine code is one reason why C++ code is generally much faster than R code.

However, not everyone knows C++ and the expertise varies from community to community. C++ is considered a lower level than R with a steeper learning curve. Because of this, it is important to assess whether the time and investment spent in learning and writing in C++ would pay off. For example, why spend a week writing in C++ when you could run the existing R code for an hour longer?

A 𝜋 approximation example¶

Optimisation using C++ and Rcpp can be demonstrated by implementing a truncation of an infinite series. An example would be calculating an approximation to 𝜋 using

There exist better algorithms to calculate 𝜋 but we use this example

only to demonstrate optimisation using C++. Let n be the number of terms to sum

in the truncated series.

Implementation in R may look like the following code.

pi_approx_1 <- function(n) {

pi_4 <- 0

sign <- 1

for (i in seq_len(n)) {

pi_4 <- pi_4 + sign / (2*i-1)

sign <- sign * -1

}

return(4*pi_4)

}

Another way in R is to avoid using for loops by using sapply as shown below.

pi_approx_2 <- function(n) {

return(4*sum(sapply(seq_len(n), function(i){(-1)^(i-1) / (2*i-1)})))

}

The function pi_approx_1() can be translated to C++. To start, create

a file called pi_approx.cc which contains the code below.

#include <Rcpp.h>

using namespace Rcpp;

// [[Rcpp::export]]

double PiApprox(int n) {

double pi_4 = 0;

double sign = 1;

for (int i=1; i<=n; i++) {

pi_4 += sign / (2*i-1);

sign *= -1;

}

return 4*pi_4;

}

For this small example, the R and C++ code are quite similar but there are quite

a few differences. For example, C++ is statically typed, thus the type for each

variable has to be specified such as double and int. Another difference is

the use of some shorthand assignments, for example, sign *= -1; is the same

as sign = sign * -1;.

The comment // [[Rcpp::export]] tells Rcpp to compile the C++ function

PiApprox() such that it is accessible by R. The following R code will

compile and call the C++ function PiApprox() given an n.

Rcpp::sourceCpp("pi_approx.cc")

PiApprox(n)

Profiling the functions pi_approx_1(), pi_approx_2() and PiApprox() in R

can be done using system.time(). The code below does this for 10 million

summation terms.

n <- 1E7

cat("Time taken when using a for loop (s)\n")

print(system.time(pi_approx_1(n)))

cat("Time taken when using sapply (s)\n")

print(system.time(pi_approx_2(n)))

cat("Time taken when using Rcpp (s)\n")

print(system.time(PiApprox(n)))

A sample profile is provided below which was run on a laptop.

user system elapsed

Time taken when using a for loop (s) 0.447 0.000 0.446

Time taken when using sapply (s) 29.308 0.118 29.411

Time taken when using Rcpp (s) 0.015 0.000 0.015

Remarkably, the C++ version of the 𝜋 approximation is at least about 30 times faster than the R version!

Re-Implementing Components in C++¶

An involved part of optimising poLCA was re-implementing the EM algorithm, the underlying algorithm for poLCA. The calculations of the goodness-of-fit statistics were also re-implemented. I've used the standard C++ libraries, the C++ library Armadillo and the R package RcppArmadillo in my C++ implementation. These libraries contain all the fundamental tools needed for most statistical algorithms.

The standard library <random>

provides

pseudo-random number generations for a lot of common distributions. This was

used to generate uniformly random starting probabilities for the EM algorithm.

The standard library

<thread> provided the means

for multi-thread work. It was used so that each thread can use the EM algorithm

for different starting values, all simultaneously. However, a problem can occur

when multiple threads modify the same variable in memory at the same time. This

is called a data race. The standard library

<mutex> was used which provides

locks to prevent data races.

Armadillo provides tools needed to do linear algebra in C++, for example, matrix multiplication, Cholesky decomposition and solving linear equations. Armadillo uses LAPACK under the hood but it provides a higher-level interface.

Finally, the R package RcppArmadillo provides a way for data from R to be read and modified by C++. It can also be integrated into R packages so that users of R can compile and run the C++ code. This makes it a versatile tool for writing high-performance code to be used in R.

Benchmarks¶

We benchmarked our optimised C++ poLCA package against the original R code using

a ddy node. A sub-sample

of 10⁴ data points were used. We used the package to fit 10 classes using

32 different initial values (by setting nrep=32).

For the original R code, we compared the settings calc.se=TRUE and

calc.se=FALSE which turn the calculations of the optional standard errors on

or off respectively. For our C++ code, we set calc.se=FALSE and ran

using a different number of threads. We repeated the benchmark 5 times but found

insignificant variation, thus we present the mean over the 5 readings.

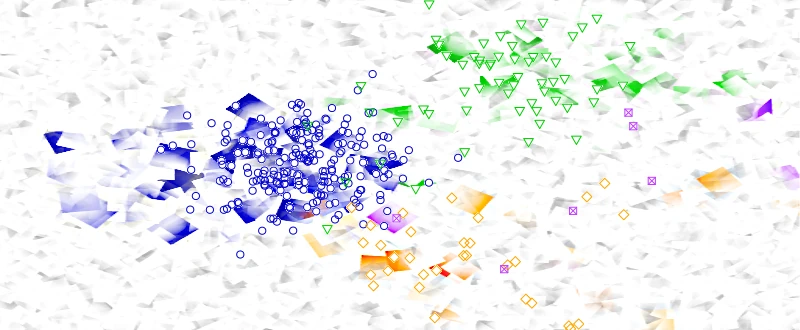

Figure 1: Benchmarks comparing R and C++ versions of poLCA. For the R versions,

we compared setting calc.se to TRUE and FALSE

The benchmarks comparing the R and C++ versions of poLCA are shown in Figure 1.

A speed-up was observed by setting calc.se=FALSE. This demonstrates that

something simple like turning off these optional outputs saved significant time.

It was fantastic to observe a 10x speedup for the C++ version.

Figure 2: Benchmarks comparing the C++ version of poLCA using a different number of threads

For the C++ version, we compare using a different number of threads as shown in Figure 2. Each thread was initialised with different values but perform the same algorithm. This is an example of an embarrassingly parallel problem because the threads very rarely interact with each other. As a result, it scales well for more threads as shown in the benchmarks.

For the full data set containing half a million data points, we set nrep=48

and used all the 48 threads on

a ddy node. We found the

algorithm can be completed in about 40 minutes. This is a fabulous improvement!

Summary¶

This was a summary of some of the techniques I've used to improve code. I would encourage you to look into some of the open-source software you are using yourself and perhaps identify some flaws or improvements. Something small like modifying poLCA to prevent it from calculating optional outputs made a significant improvement.

Re-implementing some of the components in C++ took time and effort but it paid off because the package can run on Apocrita within an hour with results.

Compared to R, C++ is quite a low-level language, but the standard C++ libraries and Armadillo provide the basic building blocks for a lot of statistical algorithms. Together with RcppArmadillo, they can provide high-performance tools for users in R.

Our re-implementation of poLCA is available on GitHub.

References¶

- Linzer, D.A. and Lewis, J.B., 2011. poLCA: An R package for polytomous variable latent class analysis. Journal of Statistical Software, 42, pp.1-29. [link]

- Armadillo - C++ library for linear algebra & scientific computing [link]

- CRAN - poLCA: Polytomous Variable Latent Class Analysis [link]

- CRAN - RcppArmadillo: 'Rcpp' Integration for the 'Armadillo' Templated Linear Algebra Library [link]

- C++ Reference - <mutex> [link]

- C++ Reference - <random> [link]

- C++ Reference - <thread> [link]