A look at the Grace Hopper Superchip

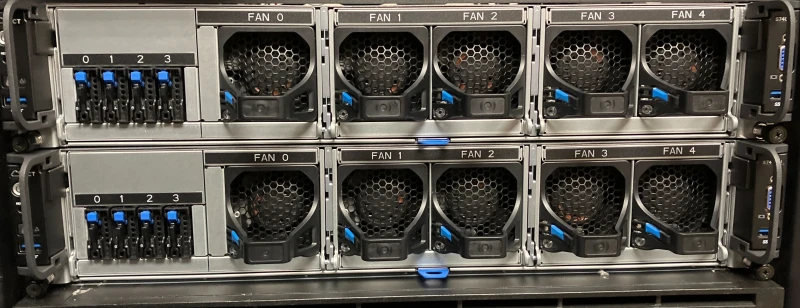

NVIDIA recently announced the GH200 Grace Hopper Superchip, a combined CPU+GPU with high memory bandwidth, designed for AI workloads. These chips will also feature in the forthcoming Isambard AI national supercomputer. We were offered the chance to pick up a couple of servers built around them for a very attractive launch price.

The CPU is a 72-core Arm-based Grace processor, connected to an H100 GPU via NVIDIA's chip-to-chip interconnect (NVLink-C2C). This link delivers 7x the bandwidth of PCIe Gen5, the interconnect commonly found in our other GPU nodes, which effectively allows the GPU to access the system memory seamlessly. This datasheet contains further details.
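To get a feel for what a 7x faster link could mean in practice, here is a rough back-of-the-envelope sketch of ideal host-to-GPU transfer times. The PCIe Gen5 figure of 64 GB/s (nominal, one direction, x16 link) is our assumption for illustration, the 7x ratio is the one quoted above, and the function names are made up for this example. Real transfers will be slower than these theoretical peaks.

```python
# Back-of-the-envelope transfer times. These are nominal peak figures,
# NOT measurements from our nodes.

# Assumption: roughly 64 GB/s in one direction for a PCIe Gen5 x16 link.
PCIE_GEN5_GB_PER_S = 64.0
# The "7x the bandwidth of PCIe Gen5" ratio quoted in the text.
C2C_SPEEDUP = 7.0


def transfer_seconds(size_gb: float, gb_per_s: float) -> float:
    """Ideal time in seconds to move size_gb gigabytes at gb_per_s GB/s."""
    if gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return size_gb / gb_per_s


def compare(size_gb: float) -> dict:
    """Ideal transfer time over PCIe Gen5 and over a link 7x faster."""
    return {
        "pcie_gen5_s": transfer_seconds(size_gb, PCIE_GEN5_GB_PER_S),
        "c2c_s": transfer_seconds(size_gb, PCIE_GEN5_GB_PER_S * C2C_SPEEDUP),
    }


if __name__ == "__main__":
    # Example payload sizes in GB, chosen arbitrarily for illustration.
    for size_gb in (1.0, 16.0, 64.0):
        times = compare(size_gb)
        print(
            f"{size_gb:5.1f} GB: "
            f"PCIe Gen5 ~{times['pcie_gen5_s'] * 1000:7.1f} ms, "
            f"chip-to-chip ~{times['c2c_s'] * 1000:7.1f} ms"
        )
```

The absolute numbers matter less than the ratio: for workloads that repeatedly shuttle data between host and GPU memory, the copy time shrinks by the same factor as the bandwidth grows, at least in this idealised model.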

This new chip offers a lot of potential for accelerating AI workloads, particularly those that require large amounts of GPU RAM or involve a lot of memory copying between the host and the GPU, so we've been running a few tests to see how it compares with the alternatives.